Many moons ago, I had an idea: give GPT a prompt and have it write me a whole book based on an idea I had. Hey GPT, write me a 30-chapter book in one go about this idea I have. Sure, said GPT, the best I can do is 500 words and some encouragement to go write the book myself. I decided to be smart and ask GPT to come up with only the main plot points of each chapter. Then I would take each of those plot points and ask GPT to write me a 1,000-word chapter based on just that one plot point. Sure, said GPT, here is the first chapter. Awesome, I said. Now do the next chapter. Sure, GPT said, here is a chapter with the main character planning a wedding and then living happily ever after. Rinse and repeat a few times, and you get a random pile of words that does not resemble a coherent book in any way, shape, or form. I then put this idea to rest, waiting for bigger and better models to come out. Mind you, I did try this with GPT-3.5. Nowadays, anybody would laugh at the idea of using GPT-3.5 for anything, and I think it’s not even available on the OpenAI website anymore, but back in the day, it was the state-of-the-art LLM, and everybody was amazed at what it could do (or not do, but yes…). My plan was to leave it there and work on other projects, until recently.

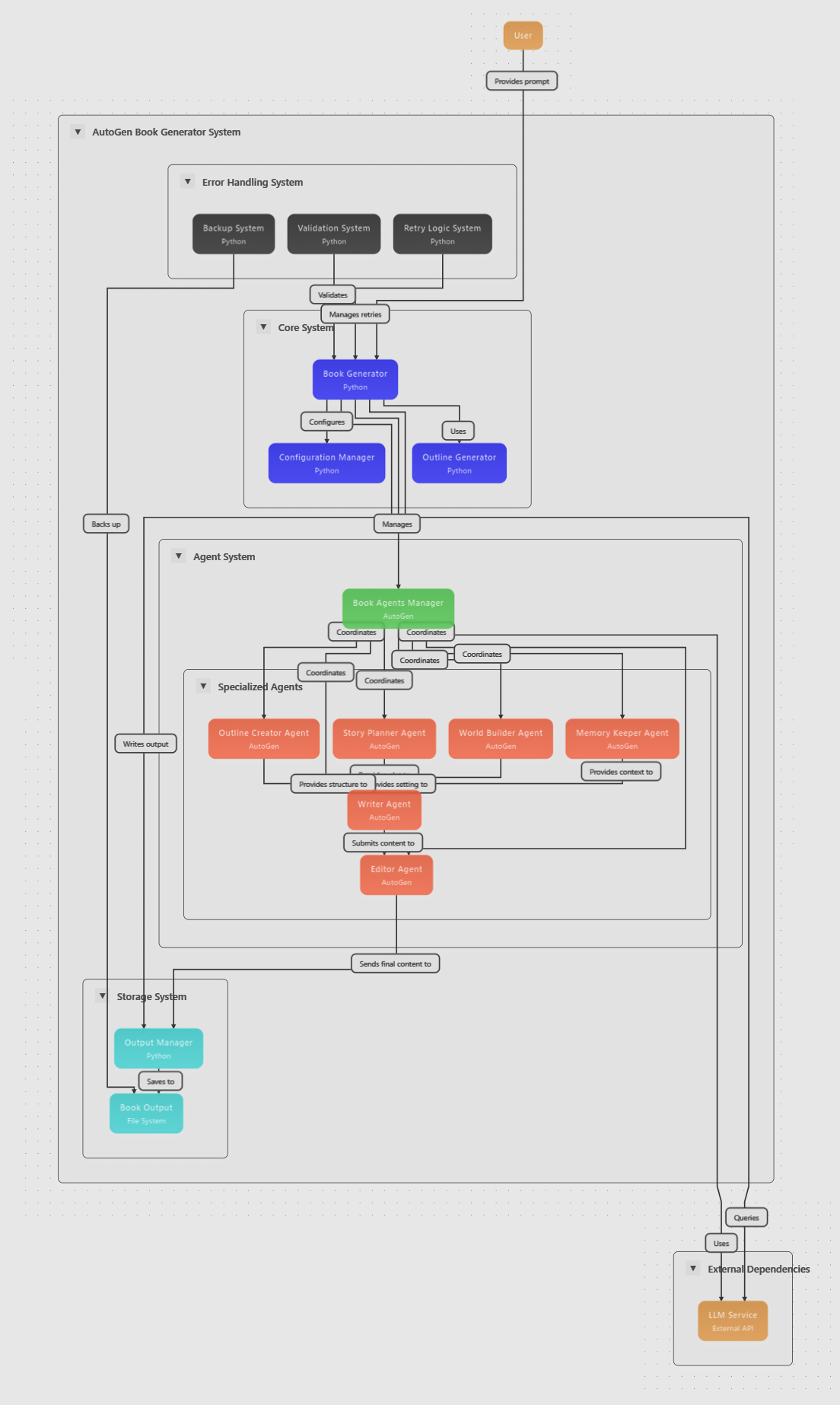

Along came the idea of agents. What is an agent, you may ask? Well, imagine a version of, say, GPT-4, but with its own personality and knowledge base. One agent does spell checking, another makes sure the storyline stays intact, and yet another perhaps makes sure all the other agents talk nicely to each other and keeps a mental note of what the others are doing. Basically, you end up with a bunch of AIs that are experts in their own “field” or goal. Instead of one AI doing all the work, you split the work across multiple agents, and together they get much better results than a single one would.

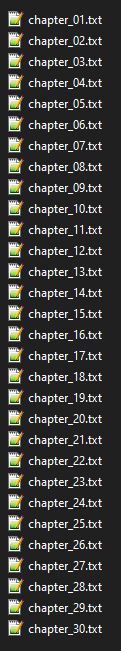

I was planning to write something like this to see whether a couple of AIs could write a full-length book that actually sticks to the storyline and plot and does not go off the rails halfway through. However, it turns out someone already had. I came across a GitHub repo from Adam W. Larson (https://github.com/adamwlarson/ai-book-writer) that does basically just that, and I was eager to give it a try. My first couple of tests did not pan out perfectly, but the idea was there. I tinkered with the code a bit, adding my own rules and parameters and some tweaks here and there, and voilà: a semi-decent script that spawns AI agents to write a full-length book. All you need to do is give it a very detailed initial prompt and set it on its way. After a couple of bug fixes and test runs, I decided to create a 30-chapter book! This was not a quick thing to do, I must say: generating the text for the book alone took about 8 hours. Keep in mind that I was using a local LLM instead of a cloud-based one like GPT, which I suspect would have cost a couple of dollars given all the back-and-forth between the AI agents. But in the end, I got what I wanted: 30 chapters based on the concept I laid out! How did this all come to be, you may ask? Well, for the more technical, nerdy people out there, here is a layout of the process.

1. Initial Setup and Configuration

– The system loads configuration settings from config.py

– An initial book prompt is provided, which describes the story concept, world, characters, and narrative structure

– The number of chapters is defined (30 in the example)

2. Agent Creation and Initialization

– The BookAgents class creates specialized AI agents with specific roles:

– Memory Keeper: Tracks story continuity, character development, and world-building consistency

– Story Planner: Focuses on high-level narrative structure and plot points

– Outline Creator: Generates detailed chapter outlines

– World Builder: Creates rich, consistent settings and locations

– Writer: Generates the actual prose for each chapter

– Editor: Reviews and improves content for quality and consistency
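To make the “one expert per role” idea a bit more concrete: at its simplest, each agent is just a name plus a system message that the LLM receives before every turn. The sketch below is framework-free and purely illustrative; `AgentSpec`, `build_agents` and the prompt wording are hypothetical and are not taken from the repo’s BookAgents class.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """One agent = a name plus the system message that defines its role."""
    name: str
    system_message: str


# Hypothetical role prompts mirroring the six agents listed above.
ROLE_PROMPTS = {
    "memory_keeper": "Track story continuity, character development and world-building facts.",
    "story_planner": "Plan the high-level narrative structure and plot points.",
    "outline_creator": "Turn the plan into detailed chapter outlines.",
    "world_builder": "Describe rich, consistent settings and locations.",
    "writer": "Write the prose for the current chapter only.",
    "editor": "Review drafts for quality and consistency and give concrete feedback.",
}


def build_agents() -> dict[str, AgentSpec]:
    """Create one AgentSpec per role; an LLM client would later receive system_message."""
    return {name: AgentSpec(name, prompt) for name, prompt in ROLE_PROMPTS.items()}
```

Keeping each prompt narrow is the whole point: the writer never has to worry about continuity, because that is someone else’s job.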

3. Outline Generation

– The OutlineGenerator class initiates the outline creation process using:

– The initial prompt

– The number of chapters

– The specialized agents

– The outline includes:

– Chapter titles

– Key events

– Character developments

– Settings

– Tone for each chapter

– The generated outline is saved to book_output/outline.txt
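A rough sketch of how an outline with those fields could be represented and written to book_output/outline.txt. The `ChapterOutline` class, the helper names and the plain-text layout are assumptions for illustration; the actual file format produced by the OutlineGenerator may well differ.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class ChapterOutline:
    number: int
    title: str
    key_events: list[str] = field(default_factory=list)
    character_developments: list[str] = field(default_factory=list)
    setting: str = ""
    tone: str = ""


def format_outline(chapters: list[ChapterOutline]) -> str:
    """Render the outline as plain text, one block per chapter."""
    blocks = []
    for ch in chapters:
        lines = [f"Chapter {ch.number}: {ch.title}"]
        lines += [f"- Key event: {e}" for e in ch.key_events]
        lines += [f"- Character development: {d}" for d in ch.character_developments]
        lines.append(f"- Setting: {ch.setting}")
        lines.append(f"- Tone: {ch.tone}")
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks) + "\n"


def save_outline(chapters: list[ChapterOutline], out_dir: str = "book_output") -> Path:
    """Write the formatted outline to <out_dir>/outline.txt and return the path."""
    path = Path(out_dir) / "outline.txt"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(format_outline(chapters), encoding="utf-8")
    return path
```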

4. Book Generation

– The BookGenerator class takes the outline and begins chapter-by-chapter generation

– Chapters are written sequentially, with each chapter proceeding only after the previous is complete

– Each chapter then goes through the following creation process:

5. Chapter Creation Process

Group Chat Initialization:

– A managed conversation is created between the agents with the chapter context

– The outline context is provided to all agents

Collaborative Writing Sequence:

– Memory Keeper: Provides context update for story continuity

– Writer: Drafts the chapter (tagged with “SCENE:”)

– Editor: Reviews and provides feedback

– Writer Final: Creates the final revised version (tagged with “SCENE FINAL:”)
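The collaborative sequence is essentially a fixed turn order. In the sketch below, each agent is stubbed as a plain Python function that receives the conversation so far and returns its reply; in a real run, each of those would be an LLM call. `run_chapter_sequence` and the step names are hypothetical, and the final pass deliberately reuses the same writer agent.

```python
from typing import Callable

# An "agent" here is just a function: conversation so far -> reply text.
Agent = Callable[[list[str]], str]

# (step label, agent that performs it); the final pass reuses the writer.
STEPS = [
    ("memory_keeper", "memory_keeper"),
    ("writer", "writer"),
    ("editor", "editor"),
    ("writer_final", "writer"),
]


def run_chapter_sequence(agents: dict[str, Agent], chapter_context: str) -> list[str]:
    """Run the fixed turn order and return the full conversation transcript."""
    conversation = [chapter_context]  # every agent sees the outline context first
    for step, agent_name in STEPS:
        reply = agents[agent_name](conversation)
        conversation.append(f"{step}: {reply}")
    return conversation
```

One advantage of a fixed order, rather than letting agents speak whenever they like, is that it becomes easy to check afterwards that every step actually happened.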

Content Verification:

– The system verifies chapter completion by checking that all required steps were completed

– Checks for a minimum length (5,000 words per chapter)

– Ensures proper formatting and consistency
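The verification step can be sketched as two checks: every required step produced output, and the text after the last “SCENE FINAL:” tag meets the word minimum. This is a minimal illustration assuming that tag marks the final version; `verify_chapter` and `extract_final_scene` are hypothetical names.

```python
from typing import Optional

MIN_WORDS = 5000  # per-chapter minimum from the list above
REQUIRED_STEPS = ("memory_keeper", "writer", "editor", "writer_final")
FINAL_TAG = "SCENE FINAL:"


def extract_final_scene(transcript: str) -> Optional[str]:
    """Return the text after the last 'SCENE FINAL:' tag, or None if the tag is missing."""
    idx = transcript.rfind(FINAL_TAG)
    if idx == -1:
        return None
    return transcript[idx + len(FINAL_TAG):].strip()


def verify_chapter(completed_steps: set[str], transcript: str, min_words: int = MIN_WORDS) -> bool:
    """All required steps ran, a final version exists, and it is long enough."""
    if not set(REQUIRED_STEPS) <= completed_steps:
        return False
    final = extract_final_scene(transcript)
    return final is not None and len(final.split()) >= min_words
```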

Chapter Saving and Memory Updates:

– The final chapter content is cleaned to remove artifacts and stray formatting

– Content is saved to book_output/chapter_XX.txt

– The Memory Keeper’s summary is stored for reference when writing future chapters
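A possible version of the cleanup and save step, assuming “artifacts” means the SCENE tags, markdown bold markers and heading marks, and assuming the XX in chapter_XX.txt is a zero-padded chapter number. Both helpers are hypothetical.

```python
import re
from pathlib import Path


def clean_chapter(text: str) -> str:
    """Strip SCENE tags and simple markdown marks, then collapse runs of blank lines."""
    text = re.sub(r"^[ \t]*SCENE(?: FINAL)?:[ \t]*", "", text, flags=re.MULTILINE)  # step tags
    text = re.sub(r"\*\*|__", "", text)  # bold markers
    text = re.sub(r"^#+[ \t]*", "", text, flags=re.MULTILINE)  # heading marks
    text = re.sub(r"\n{3,}", "\n\n", text)  # at most one blank line between paragraphs
    return text.strip() + "\n"


def save_chapter(number: int, text: str, out_dir: str = "book_output") -> Path:
    """Clean the chapter and write it to chapter_XX.txt (zero-padding is an assumption)."""
    path = Path(out_dir) / f"chapter_{number:02d}.txt"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(clean_chapter(text), encoding="utf-8")
    return path
```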

Error Handling:

– If chapter generation fails, a simplified retry process is attempted

– Uses a reduced agent set (User Proxy, Story Planner, Writer)

– Creates backup files when needed
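The retry logic boils down to “try the full team, then try again with a smaller one”. A minimal sketch, where `generate` stands in for whatever actually runs the agents; the function name and the exact agent tuples are assumptions, and backup-file creation is left out.

```python
from typing import Callable

# Assumed agent sets: the full team first, then the reduced set from the list above.
FULL_AGENTS = (
    "memory_keeper", "story_planner", "outline_creator",
    "world_builder", "writer", "editor",
)
REDUCED_AGENTS = ("user_proxy", "story_planner", "writer")

ChapterGenerator = Callable[[int, tuple[str, ...]], str]


def generate_with_fallback(generate: ChapterGenerator, chapter: int) -> str:
    """Try the full agent set; on any failure, retry once with the reduced set."""
    try:
        return generate(chapter, FULL_AGENTS)
    except Exception:  # broad on purpose: timeouts, unusable LLM output, etc.
        try:
            return generate(chapter, REDUCED_AGENTS)
        except Exception as err:
            raise RuntimeError(f"Chapter {chapter} failed with both agent sets") from err
```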

6. Sequential Validation

– Each chapter is verified before proceeding to the next

– The system checks that previous chapters exist and have valid content

– Ensures narrative continuity throughout the book

– If validation fails at any point, the process stops
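Sequential validation can be sketched as a guard in the main loop: before writing chapter n, confirm that chapters 1 to n-1 exist and are not empty, and raise to stop the run otherwise. The helper names and the “non-empty” criterion are assumptions; the real check may be stricter.

```python
from pathlib import Path
from typing import Callable


def chapter_path(out_dir: str, n: int) -> Path:
    return Path(out_dir) / f"chapter_{n:02d}.txt"


def previous_chapters_valid(chapter: int, out_dir: str = "book_output") -> bool:
    """True if chapters 1..chapter-1 all exist and contain at least some text."""
    for n in range(1, chapter):
        path = chapter_path(out_dir, n)
        if not path.is_file() or not path.read_text(encoding="utf-8").strip():
            return False
    return True


def generate_book(num_chapters: int, write_chapter: Callable[[int], None],
                  out_dir: str = "book_output") -> None:
    """Write chapters strictly in order and stop as soon as validation fails."""
    for n in range(1, num_chapters + 1):
        if not previous_chapters_valid(n, out_dir):
            raise RuntimeError(f"Validation failed before chapter {n}; stopping.")
        write_chapter(n)
    if not previous_chapters_valid(num_chapters + 1, out_dir):
        raise RuntimeError("Validation failed after the last chapter.")
```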

7. Completion

– When all chapters are successfully generated, the book is complete with:

– Properly structured chapters

– Character and plot consistency

– World-building elements maintained throughout

– Each chapter meeting the minimum content requirements

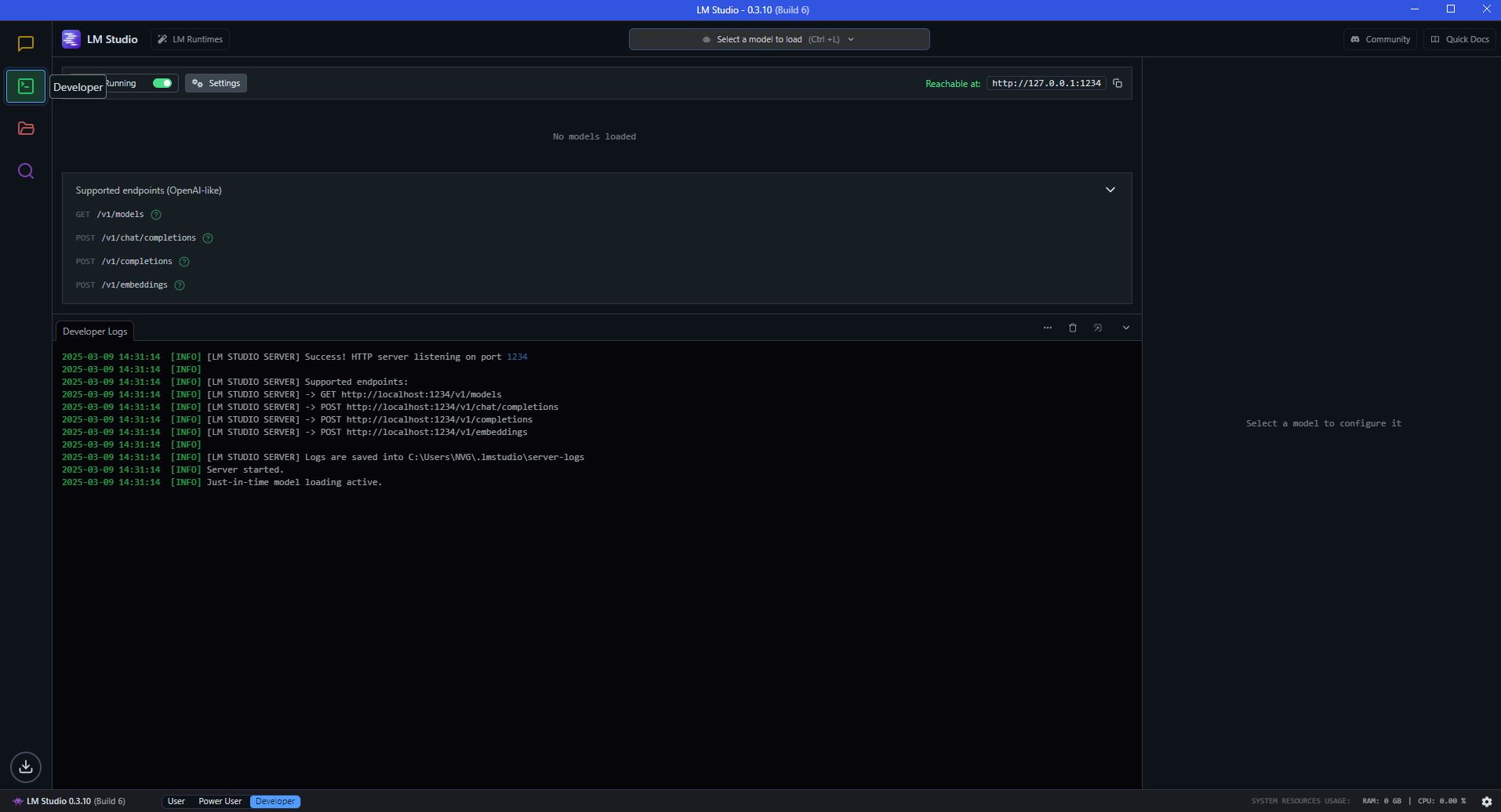

As for the LLM, I used LM Studio to run a model locally. I know this is not as powerful as calling, say, GPT or Claude, but it is much cheaper, as you are using the GPU in your own computer instead of paying for cloud tokens. Well, cheaper, yes, but slower and louder: my GPU would have been doing laps around the room had it not been screwed into my PC case.

This whole process resulted in one .txt file per chapter. I still had to edit some of the chapters by hand, as my bug fixes and enhancements did not cater for everything, so some cleanup was needed.

Well, that is it then? Good job, and on to the next project? Well, no. I always seem to over-engineer things, and this is no exception. What if we could turn this into an audiobook? Even better, a visual audiobook, with “someone” (yes, an AI) reading the book and another AI creating images for each chapter... No, wait... for each paragraph! Would that not be amazing? Challenge accepted! The first test was to see whether I could simply convert the text files into an audiobook. I ended up using Kokoro-TTS from Hugging Face, as I wanted something that runs locally. Yes, there are much better text-to-speech options out there, but, well, they cost money, and this one is the “we have it at home” version, as the proverbial mom would say. So, long story short, I downloaded the model, had my GPU fly around the room a bit, and ended up with a sound file for each chapter. The result was actually not too bad. It could raise and lower its tone based on what was happening in the scene, making it slightly less robotic. Feel free to have a listen to a sample chapter below.

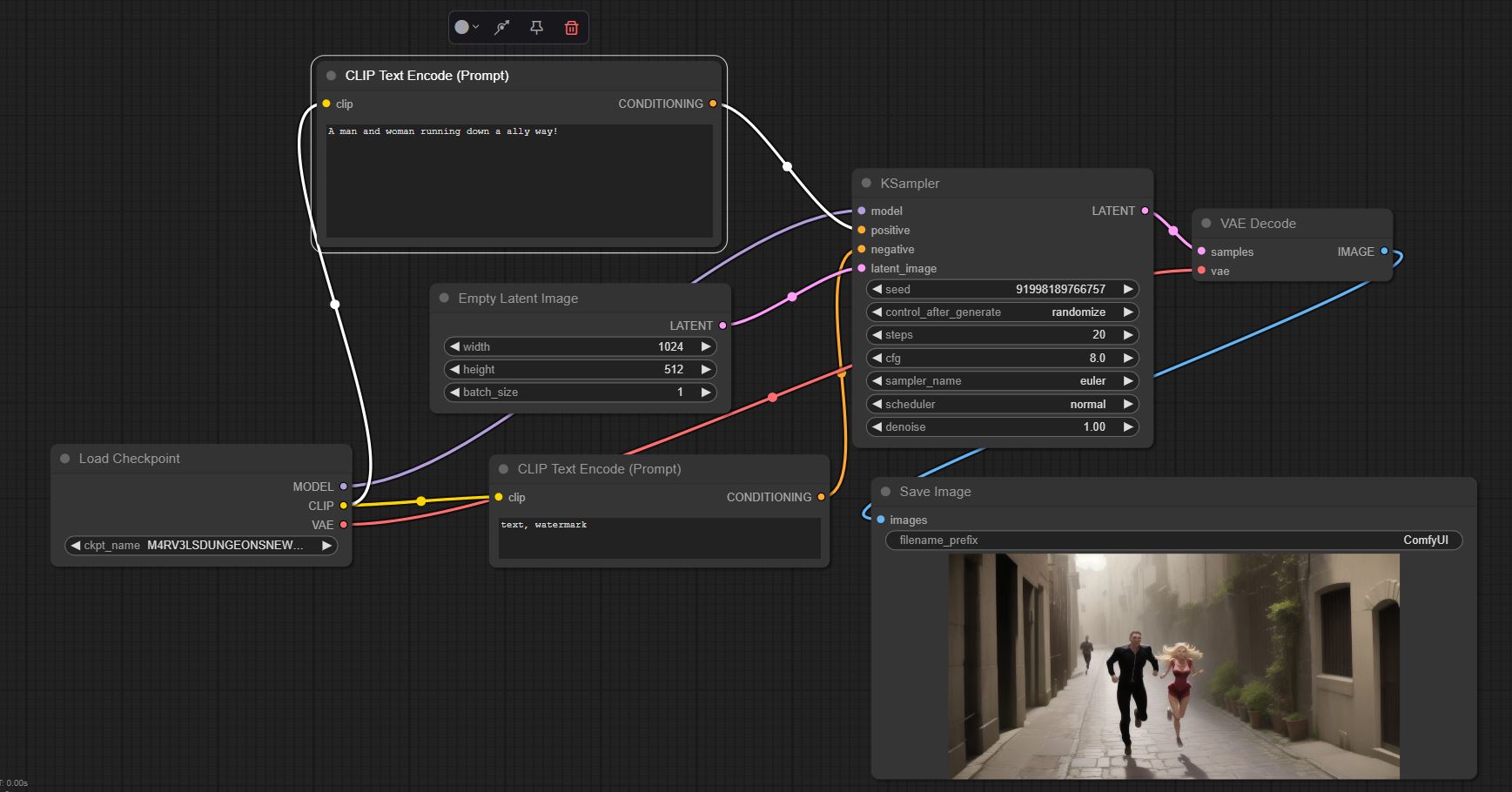

Right, that is the sound part done. Now, what about the images? I wanted to make an image for each paragraph. I ended up using ComfyUI and calling it via its built-in API from my Python script. I had to play around a bit with timeouts and the like, but in the end, this was a rather good option. I also installed a custom model from Civitai, a comic-book model that gave an arty feel to the generated images. Because I ran it on low settings with a limited number of steps, some of the images came out, well, like generic AI images with extra arms and legs, and some were just plain weird, but since I was building a proof of concept (POC), I did not bother putting more time into better-looking images. Below is the workflow as it appears in the frontend; when using the API, you essentially recreate it from scratch as JSON in the script.

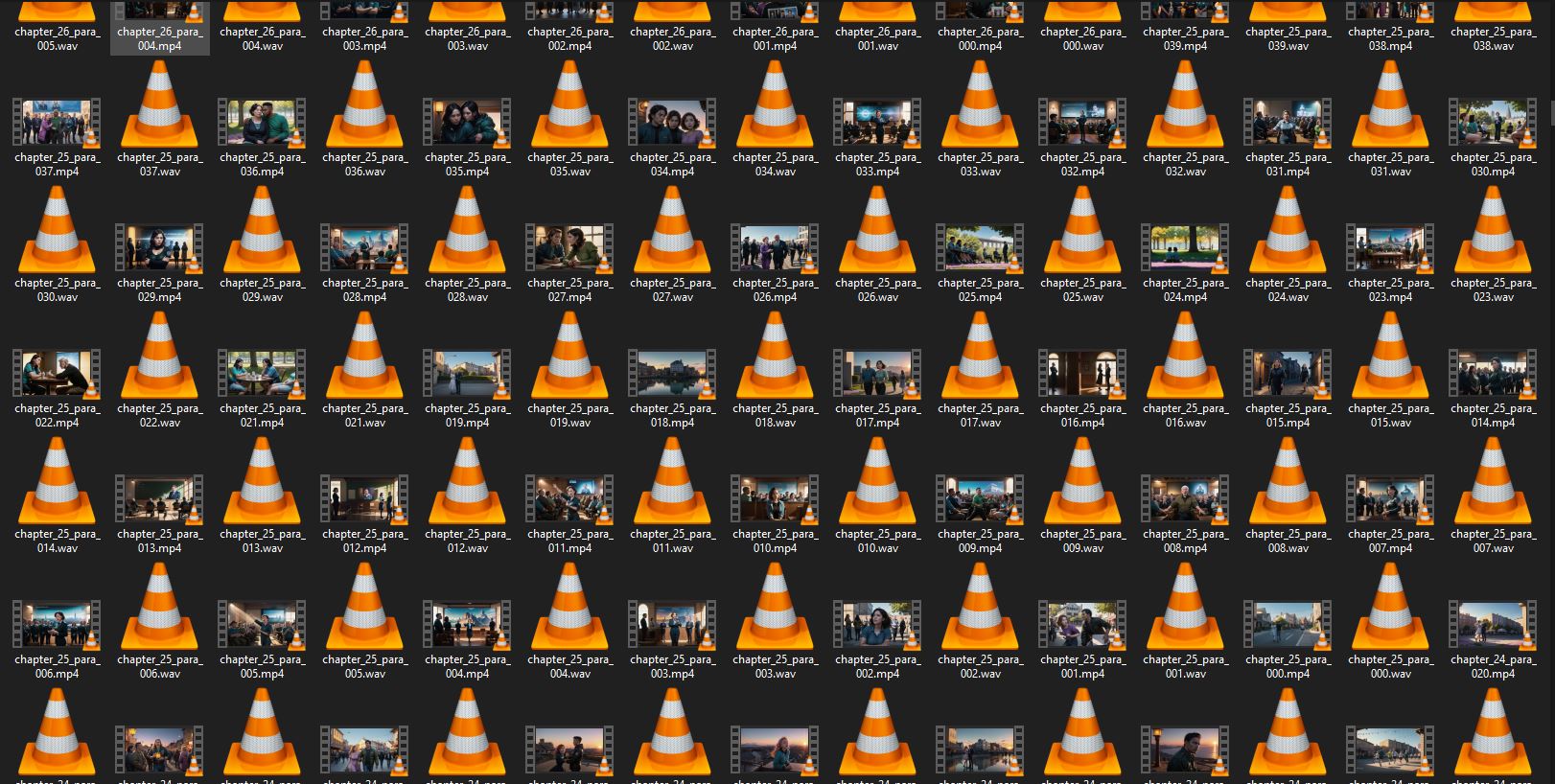

After the whole process finished, I ended up with 910 images across all the chapters. I will add that in the first attempt, the images generated for each paragraph were all over the show, with no consistency. I started by just feeding the actual words of each paragraph into the image generator. This had sad and funny results. For example, if a paragraph had someone calling another character a monster for being heartless, you would imagine an image of a woman yelling at someone in a fierce argument, but no, you would be wrong. Most of the time, you would get an actual monster holding a heart in its claw, or something equally silly. Next, I tried using my local LLM again to take the chapter and convert it into a prompt for the image generator. This worked better, but you still got images that were way out of context. What worked in the end was giving the LLM both the entire chapter and the specific paragraph and telling it to come up with a prompt for that paragraph based on the entire chapter. This resulted in much more consistent images. If you look at the screenshot below, you can actually see different scenes being depicted through the changes in color and layout of the images.

Once this step was complete, I ended up with a ton of short audio files and corresponding images. Each audio file and its image were then combined into a short video clip for that specific paragraph.

The last two steps were to combine all the small clips into one video per chapter and then, finally, combine all the chapters into one final video. I will admit this whole process did not go one hundred percent smoothly: the script sometimes started combining the image and audio before the image had finished rendering. This resulted in some scenes having just a white background with only the audio playing. Luckily, this only happened about 10 times, and I did not want to spend more time debugging it just to get the POC video out the door.

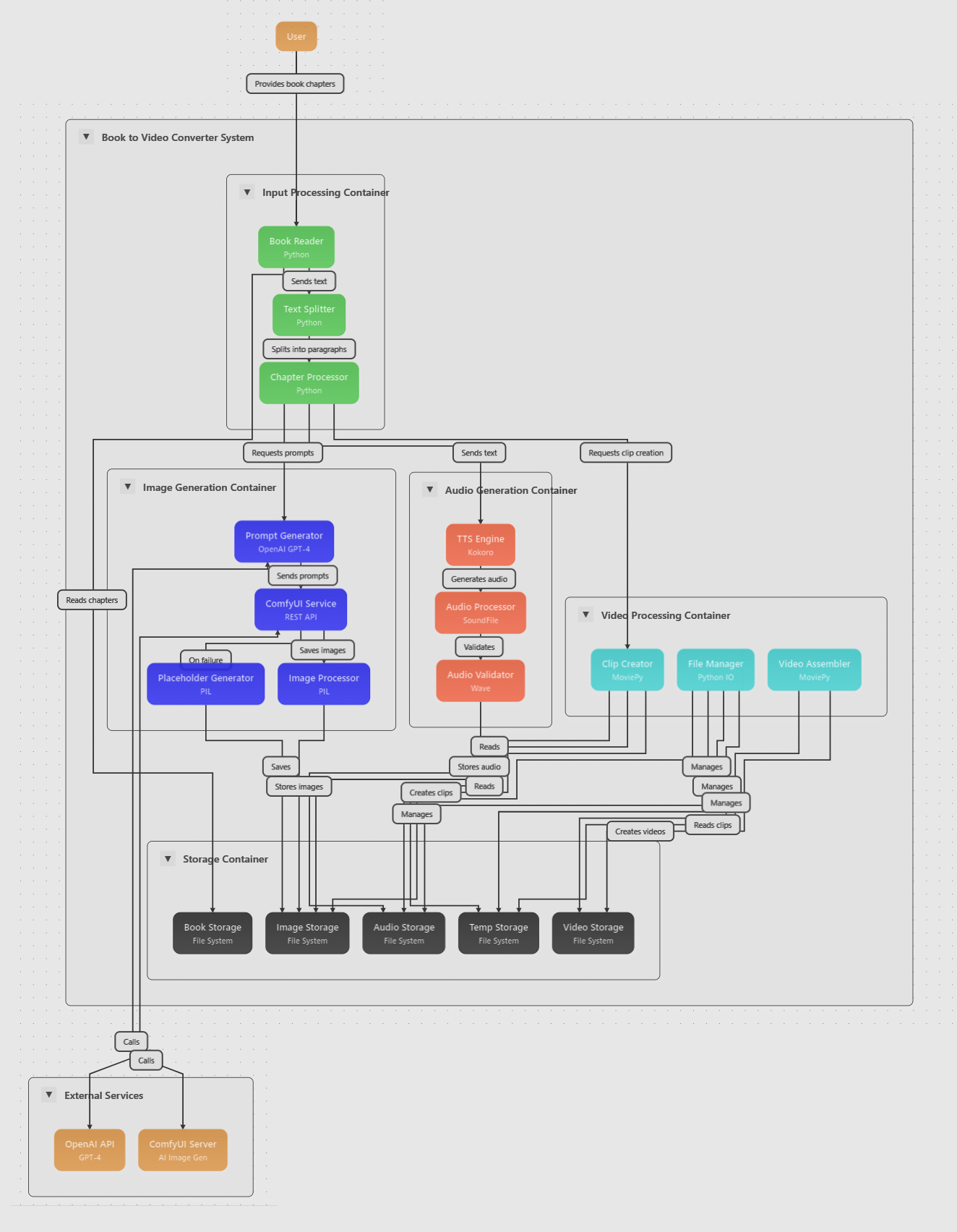

So, again, for those who want to dive a bit deeper into the video-making part of my POC AI book, here is a code layout explaining it in detail.

1. Input Processing Container

Book Reader:

– The system starts by reading text files from the book_output directory (located at E:/Projects/BookMaker/book_output)

– Each file represents a chapter of the book

Text Splitter:

– The text from each chapter is split into paragraphs using the split_into_paragraphs function

– The system skips the first line (CHAPTER X) of each chapter

– Empty lines are also skipped
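A minimal take on what a function like split_into_paragraphs might do, assuming each paragraph sits on its own line and the first line is the CHAPTER X heading. Only the function name comes from the layout above; the body is a guess.

```python
def split_into_paragraphs(chapter_text: str) -> list[str]:
    """Drop the 'CHAPTER X' heading line and return the remaining non-empty lines."""
    lines = chapter_text.splitlines()[1:]  # assumption: line 1 is always the heading
    return [line.strip() for line in lines if line.strip()]  # skip empty lines
```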

Chapter Processor:

– The process_chapter function orchestrates the conversion of each chapter

– The full chapter context is maintained and passed to subsequent processes for richer prompt generation

2. Image Generation Container

Prompt Generator:

– For each paragraph, the system calls a local LLM to generate an image prompt

– The generate_image_prompt function sends both the individual paragraph AND the full chapter context to the local LLM

– This gives the image generator better context for each paragraph
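The trick that made the images consistent, giving the LLM the whole chapter plus the one paragraph, can be sketched as a single chat request. This assumes the model is served by LM Studio’s OpenAI-compatible local server on its default port 1234; the URL, the prompt wording and the placeholder model name are assumptions, and only the name generate_image_prompt comes from the layout above.

```python
import json
import urllib.request

LLM_URL = "http://localhost:1234/v1/chat/completions"  # assumed LM Studio default


def build_image_prompt_request(chapter_text: str, paragraph: str,
                               model: str = "local-model") -> dict:
    """Chat request asking for one image prompt, with the whole chapter as context."""
    return {
        "model": model,  # placeholder: whatever model is loaded locally
        "messages": [
            {"role": "system",
             "content": "You write short, concrete prompts for an image generator."},
            {"role": "user",
             "content": ("Full chapter for context:\n" + chapter_text
                         + "\n\nParagraph to illustrate:\n" + paragraph
                         + "\n\nDescribe one image for this paragraph, "
                           "consistent with the chapter.")},
        ],
        "temperature": 0.7,
    }


def generate_image_prompt(chapter_text: str, paragraph: str, timeout: float = 120.0) -> str:
    """Send the request and return the model's reply text."""
    body = json.dumps(build_image_prompt_request(chapter_text, paragraph)).encode("utf-8")
    req = urllib.request.Request(LLM_URL, data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"].strip()
```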

ComfyUI Service:

– The generated prompts are sent to ComfyUI running on http://127.0.0.1:8000

– The comfy_image_gen.py module handles the ComfyUI API communication

– A JSON template defines the workflow with:

– A checkpoint model (M4RV3LSDUNGEONSNEWV40COMICS_mD40.safetensors)

– Image dimensions (1024×512)

– Negative prompts for improved quality

– Steps reduced from 100 to 20 (or 50 as shown in code) for faster generation
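Driving ComfyUI from a script generally means exporting the workflow in API format, patching a few inputs per image and POSTing it to the /prompt endpoint. The sketch assumes node "6" is the positive prompt and node "3" is the KSampler, which is how ComfyUI’s default text-to-image workflow is numbered; in your own exported JSON the IDs may well differ. The helper names are hypothetical.

```python
import copy
import json
import urllib.request
import uuid

COMFY_URL = "http://127.0.0.1:8000"


def patch_workflow(workflow: dict, prompt_text: str, steps: int = 20, seed: int = 0,
                   prompt_node: str = "6", sampler_node: str = "3") -> dict:
    """Return a copy of an API-format workflow with the prompt, steps and seed filled in."""
    wf = copy.deepcopy(workflow)  # keep the loaded template untouched for the next image
    wf[prompt_node]["inputs"]["text"] = prompt_text
    wf[sampler_node]["inputs"]["steps"] = steps
    wf[sampler_node]["inputs"]["seed"] = seed
    return wf


def queue_prompt(workflow: dict) -> str:
    """POST the workflow to ComfyUI's /prompt endpoint and return its prompt_id."""
    payload = {"prompt": workflow, "client_id": str(uuid.uuid4())}
    req = urllib.request.Request(f"{COMFY_URL}/prompt",
                                 data=json.dumps(payload).encode("utf-8"),
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)["prompt_id"]
```

Once queued, the finished image can be located by polling /history/{prompt_id} and downloading the file listed there via /view.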

Placeholder Generator:

– If image generation fails, a placeholder image is created with the paragraph text

Image Processor:

– Generated images are saved to E:/Projects/BookMaker/Visual-Book/images

– The system waits for the image file to be fully accessible before proceeding
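Waiting for the image file is one place where the white-background clips mentioned above could plausibly sneak in. One possible guard, sketched below, is to wait until the file exists, can be opened and its size has stopped changing for a few polls. `wait_for_file` and its defaults are hypothetical; a sturdier option would be to wait for ComfyUI itself to report the prompt as finished.

```python
import os
import time


def wait_for_file(path: str, timeout: float = 300.0, poll: float = 0.5,
                  stable_checks: int = 3) -> bool:
    """Return True once `path` is non-empty and its size is unchanged for
    `stable_checks` consecutive polls; return False if `timeout` runs out."""
    deadline = time.monotonic() + timeout
    last_size, stable = -1, 0
    while time.monotonic() < deadline:
        try:
            size = os.path.getsize(path)
            with open(path, "rb"):
                pass  # can raise OSError if another process holds a lock (mostly Windows)
        except OSError:
            size = -1  # missing or not yet readable
        if size > 0 and size == last_size:
            stable += 1
            if stable >= stable_checks:
                return True
        else:
            stable = 0
        last_size = size
        time.sleep(poll)
    return False
```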

3. Audio Generation Container

TTS Engine:

– The Kokoro TTS system is used to convert paragraph text to audio

– The default voice used is ‘af_bella’

– Audio files are saved to E:/Projects/BookMaker/audio_output

Audio Processor:

– The system calculates the audio duration to properly sync with the images
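Getting the duration is simple arithmetic: frames divided by sample rate, so 36,000 frames at 24,000 Hz gives 1.5 seconds, and that is how long the paragraph’s image should stay on screen. A sketch using Python’s built-in wave module, assuming the TTS output is saved as WAV; `wav_duration` is a hypothetical helper name.

```python
import wave


def wav_duration(path: str) -> float:
    """Seconds of audio in a WAV file: frame count divided by frames per second."""
    with wave.open(path, "rb") as wf:
        return wf.getnframes() / wf.getframerate()
```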

4. Video Processing Container

Clip Creator:

– For each paragraph, the create_paragraph_clip function:

– Takes the generated image

– Combines it with the corresponding audio

– Creates a video clip with the image displayed for the duration of the audio

– Uses MoviePy for video creation

File Manager:

– Manages temporary files for the video creation process

Video Assembler:

– The combine_clips function combines all paragraph clips into a single chapter video

– Final videos are saved to E:/Projects/BookMaker/Visual-Book/videos
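A sketch of the clip and assembly steps, written against the MoviePy 2.x API (`with_duration`/`with_audio`; MoviePy 1.x used `set_duration`/`set_audio` and `from moviepy.editor import ...`). Only the names create_paragraph_clip and combine_clips come from the layout above; the bodies are guesses, and `clip_start_times` is an extra hypothetical helper showing where each paragraph lands in the chapter video.

```python
def clip_start_times(durations: list[float]) -> list[float]:
    """Start time of each paragraph clip once clips are concatenated back to back."""
    starts, t = [], 0.0
    for d in durations:
        starts.append(t)
        t += d
    return starts


def create_paragraph_clip(image_path: str, audio_path: str):
    """Show one still image for exactly as long as its narration."""
    from moviepy import AudioFileClip, ImageClip  # lazy import; needs moviepy>=2 and ffmpeg

    audio = AudioFileClip(audio_path)
    return ImageClip(image_path).with_duration(audio.duration).with_audio(audio)


def combine_clips(clips: list, out_path: str, fps: int = 24) -> None:
    """Concatenate paragraph clips into a single chapter video."""
    from moviepy import concatenate_videoclips

    video = concatenate_videoclips(clips, method="compose")
    video.write_videofile(out_path, fps=fps)
    video.close()
```

MoviePy relies on ffmpeg being available on the system to read the audio and write the video file.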

5. Storage Container

The system maintains several storage locations:

– Book Storage: Original text files in book_output

– Image Storage: Generated images in Visual-Book/images

– Audio Storage: Generated audio files in audio_output

– Temp Storage: Temporary files in Visual-Book/temp

– Video Storage: Final video outputs in Visual-Book/videos

6. External Services

– Local LLM: Used for generating image prompts

– ComfyUI Server: Running locally for image generation (at port 8000)

Key Features and Optimizations

– Full chapter context is included when generating image prompts for richer image generation

– ComfyUI steps reduced from 100 to 20 for faster image generation

– Fallback to placeholder images if image generation fails

– Robust file access checking to avoid issues with locked files

– Error handling throughout the process

And that’s a wrap, folks. The end result is a 4-hour-long audiobook. Feel free to have a listen, and if you make it to the end, please let me know what you think.

In a world where emotions are commodified, taxed, and controlled, two unlikely allies emerge from the shadows of oppression. Aurek Voss, a creator of emotion capsules haunted by his sister’s mysterious illness, and Cora Ellin, a disillusioned Bureau worker who stumbles upon a conspiracy that threatens everything she knows. As the tyrannical Bureau tightens its grip on society, extracting emotional taxes and manipulating the populace, Aurek and Cora uncover the sinister truth behind Metastrum—a substance that promises emotional freedom but harbors devastating consequences. Their journey takes them through the vibrant yet dangerous Market of Emotions, into the clutches of ruthless cartels, and ultimately to the heart of the Bureau itself, where they must confront the architect of their society’s suffering. With revolution brewing in the streets and Soul Sickness claiming more victims each day, Aurek and Cora must navigate a treacherous landscape of moral ambiguity, personal sacrifice, and unexpected alliances. Together, they spark a movement that challenges not just who controls emotions, but what it means to feel in a world that has forgotten the value of authentic human connection. “The Market of Emotions” is a gripping tale of resistance, redemption, and the revolutionary power of embracing our most fundamental humanity in the face of systemic corruption.