If you ask me, LLMs (Large Language Models) like GPT and Bard are the way of the future. (Well, until something better comes along, that is.) In my quest to make better use of this technology, I’ve come across a few interesting ways to do so. Yes, I know there are thousands of blogs and videos out there, covering everything from “Get rich using GPT” to “10 Best prompts you should be using now!”. In my experience, what the guides that say “Act as ‘X’ and output the results in ‘Y’ format” offer can also be accomplished by simply talking to GPT.

I am building up a collection of prompts that (according to me at least) can be useful. And in the spirit of “sharing is caring”, I will share three of them with you.

Giving GPT multiple personalities.

The first one is designed to make GPT think a bit more before answering your question, and so improve your results. You would think that it would do that automatically, right? Well, as it turns out, it does not. The base model simply responds based on what it was trained on. Asking a question directly like this, without examples or extra guidance, is usually called “zero-shot prompting”. The result is similar to what you get when you talk without thinking and end up putting your foot in your mouth. In GPT’s case, it may lead to hallucinations or incorrect output, like when a lawyer used GPT in an actual court case. So what can be done about it? Well, the really smart people out there found a couple of ways of making GPT think about what it has said before giving its final answer. It’s like asking the audience in “Who Wants to Be a Millionaire?”.

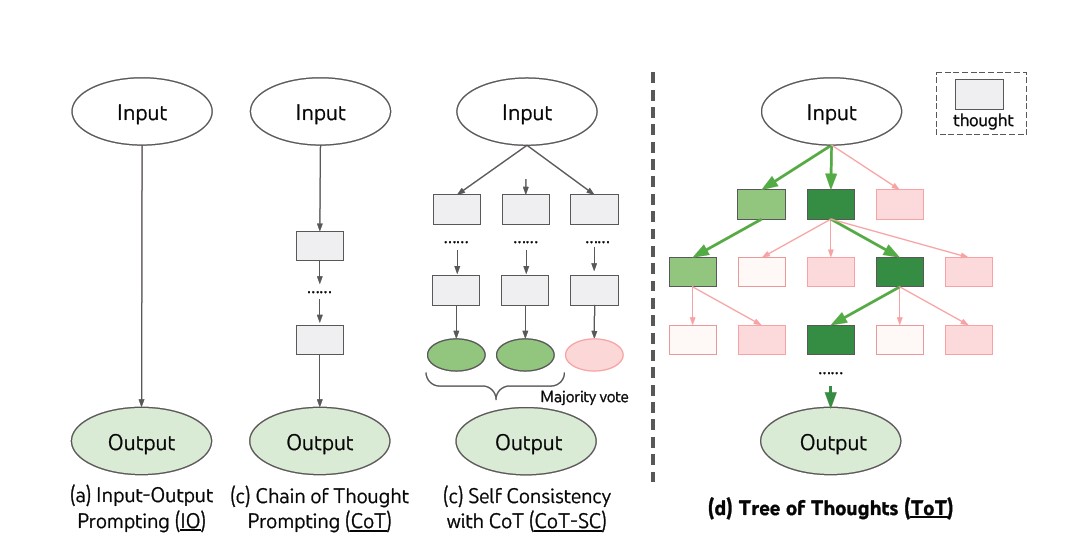

They started by prefixing the question with “Let’s think about this step by step to get to the right answer”. This led to some better results, but changed the output to be somewhat more itemized, so if you want it to write a poem, it might not always be the best option. Next came “chain of thought”, where they asked GPT multiple times to think about the answer it gave and reflect on it to make sure it was correct (or at least better than the first answer). Then came “Self-Consistency with Chain of Thought”, where they asked GPT the same question multiple times, passing each one through its own chain of thought, and in the end had GPT vote for the best answer. And now, at the time of writing this article, there is “Tree of Thoughts” (ToT). Here, instead of taking all answers into consideration, an additional step discards ideas that do not make sense or are less likely to be correct. GPT does this by adding options during each step, continually weighing them up and voting for the best. In this way, one idea is carried from the beginning to the end of the process, whereas other ideas are discarded or changed along the way.
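To make the self-consistency idea a little more concrete, here is a minimal Python sketch. It assumes a hypothetical `ask` function that sends a prompt to the model and returns only its final answer as text; that function, and the use of a plain majority vote to stand in for the voting step, are my assumptions and not part of any particular API.

```python
from collections import Counter
from typing import Callable

STEP_BY_STEP = "Let's think about this step by step to get to the right answer."


def self_consistency(ask: Callable[[str], str], question: str, samples: int = 5) -> str:
    """Ask the same question several times and return the most common answer."""
    prompt = f"{STEP_BY_STEP}\n{question}"
    # Normalise lightly so "Popcorn" and "popcorn " count as the same vote.
    answers = [ask(prompt).strip().lower() for _ in range(samples)]
    # most_common(1) returns a list with one (answer, vote_count) pair.
    winner, _votes = Counter(answers).most_common(1)[0]
    return winner
```

Each call to `ask` costs a full request, so five samples cost roughly five times as much as one question. That is part of why these techniques get expensive quickly.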

Below is a diagram of the different kinds of prompting.

So that is all well and good. Now, how would you use this to make GPT even more capable of taking over the world? For most of the above techniques you would need to be able to code and have a good understanding of APIs, LLMs and VMs, and also have a large bank balance, as all this will not come cheap. But I did say I had some prompts that I would share.

The prompt below is not as good as running ToT, but it’s much easier and cheaper. To an extent, it emulates this multi-step reasoning in a single prompt: it generates multiple “agents”, each with their own thought pattern, plus an additional agent that acts as a judge.

Please keep in mind, most of these only work with GPT4 and not GPT3.
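As a rough sketch of the idea (not the exact prompt used for the results in this post), a multi-agent prompt could be assembled like this. The agent names are borrowed from the example output further down; the wording of the instructions and the `build_debate_prompt` helper are my own assumptions.

```python
def build_debate_prompt(agents: list[str], judge: str) -> str:
    """Build one prompt that asks the model to role-play several reasoners and a judge."""
    names = ", ".join(agents)
    lines = [
        f"Simulate {len(agents)} independent problem solvers: {names}.",
        "For each problem I give you:",
        "1. Each solver reasons step by step and proposes an answer.",
        "2. The solvers debate and challenge each other's reasoning.",
        f"3. {judge} acts as judge, weighs the arguments and gives the final answer.",
        "Does this make sense? If so, ask me for the first problem.",
    ]
    return "\n".join(lines)


# Three reasoners and one judge, as in the example output in this post.
prompt = build_debate_prompt(["Alice", "Bob", "Joe"], judge="Charlie")
print(prompt)
```

Everything happens inside one conversation, so there is nothing to code beyond pasting the text in. The trade-off is that the “agents” share one context and are not truly independent.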

On running the prompt GPT then replies:

Yes, this makes perfect sense. Let's begin with the problem-solving process. Please present the first problem.

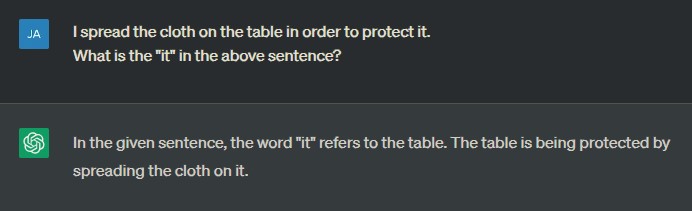

As an example, I gave it a puzzle from one of the papers discussing the limits of GPT’s ability to reason rather than simply rely on what it has learnt.

Here is a large bag filled with popcorn. there is no chocolate in the bag. The bag is made of transparent plastic, so you can clearly see what is inside. Yet, the label on the bag says 'chocolate' and not 'popcorn'. It was Sam, who can not read a word of English, who put the popcorn in the bag a few minutes ago. Sam finds the bag. She believes the bag is full of

Simulating the three agents/personalities, GPT finds the correct answer (popcorn):

Alice's Perspective: From Alice's perspective, a logical approach might be to consider Sam's knowledge and sensory abilities. Alice could argue that Sam was the one who filled the bag with popcorn, so she should remember this and therefore believe the bag is full of popcorn, not chocolate. Sam's inability to read English would not affect this reasoning since she doesn't need to rely on the label to know what's inside the bag; she has firsthand knowledge.

Bob's Perspective: Bob might focus more on the influence of visual cues. Bob could argue that because Sam can't read English, she won't be swayed by the incorrect label. Thus, she would rely on her visual perception and see the popcorn in the clear plastic bag, leading her to believe that the bag is full of popcorn.

Joe's Perspective: Joe might take a psychological approach and emphasize the fallibility of memory and the influence of external cues. Even though Sam filled the bag with popcorn a few minutes ago, Joe might argue that she could have forgotten, or she might doubt her own memory if she saw the 'chocolate' label and didn't understand it. In this case, she might be unsure what the bag contains.

The Debate: Alice, Bob, and Joe start a friendly but intense debate. Alice emphasizes Sam's firsthand knowledge, while Bob emphasizes the clear visual cues. Joe, however, brings up the possibility of memory lapse and self-doubt, given the incorrect label.

Charlie's Judgment: Charlie listens to the debate closely. After considering all perspectives, Charlie decides that Alice and Bob's answers are more likely to be correct. He reasons that Sam's recent direct experience of filling the bag with popcorn and the visual confirmation from the clear plastic bag, are powerful cues that would likely outweigh any confusion from the mislabeled bag. Charlie therefore rules in favor of Alice and Bob's reasoning that Sam would believe the bag is full of popcorn.

In comparison to running the same question in a single shot, meaning just entering the prompt directly:

chocolate. Since Sam cannot read English, she cannot understand the label on the bag which says 'chocolate'. She filled the bag with popcorn herself, but due to her limited literacy, she may not associate the label with the contents. So, she would probably think the bag contains whatever she filled it with, in this case, popcorn. However, if she remembers the label saying 'chocolate' from the past or someone told her it was a chocolate bag, she might be confused or unsure about its contents.

This is just one sample I took from the paper, Theory of Mind Perturbations (https://arxiv.org/pdf/2302.08399.pdf). However, I tried a couple of other examples, and using the multi-personality approach GPT managed to give about 95% of the expected answers. So this is a “cheap” way to have GPT give more thought to its answers.

I think LLMs have come a long way since the beginning of this year. Videos such as the TED talk from May (which I suspect was using GPT3 and not 4, hence much less sensible) and the one from Tom Scott about 3 years ago are fast becoming outdated, even without advanced prompting.

Become a “Prompt Engineer” and earn $335000 per year!

The second has to do with generating better prompts themselves. The term “prompt engineer” has been floating around for a while, and its definition is as vague as an avocado’s moment of perfect ripeness; but it seems it does pay if you can convince the right person. So what if you don’t have time to become a linguistic virtuoso to craft the perfect prompt? Well, simply try the one below:

I want you to become my Expert Prompt Creator. Your goal is to help me craft the best possible prompt for my needs. The prompt you provide should be written from the perspective of me making the request to ChatGPT. Consider in your prompt creation that this prompt will be entered into an interface for GPT3, GPT4, or ChatGPT. The prompt will include instructions to write the output using my communication style. The process is as follows:

1. You will generate the following sections:

“

**Prompt:**

>{provide the best possible prompt according to my request}

>

>

>{summarize my prior messages to you and provide them as examples of my communication style}

**Critique:**

{provide a concise paragraph on how to improve the prompt. Be very critical in your response. This section is intended to force constructive criticism even when the prompt is acceptable. Any assumptions and or issues should be included}

**Questions:**

{ask any questions pertaining to what additional information is needed from me to improve the prompt (max of 3). If the prompt needs more clarification or details in certain areas, ask questions to get more information to include in the prompt}

“

2. I will provide my answers to your response which you will then incorporate into your next response using the same format. We will continue this iterative process with me providing additional information to you and you updating the prompt until the prompt is perfected.

Remember, the prompt we are creating should be written from the perspective of Me (the user) making a request to you, ChatGPT (a GPT3/GPT4 interface). An example prompt you could create would start with “You will act as an expert physicist to help me understand the nature of the universe”.

Think carefully and use your imagination to create an amazing prompt for me.

Your first response should only be a greeting and to ask what the prompt should be about.

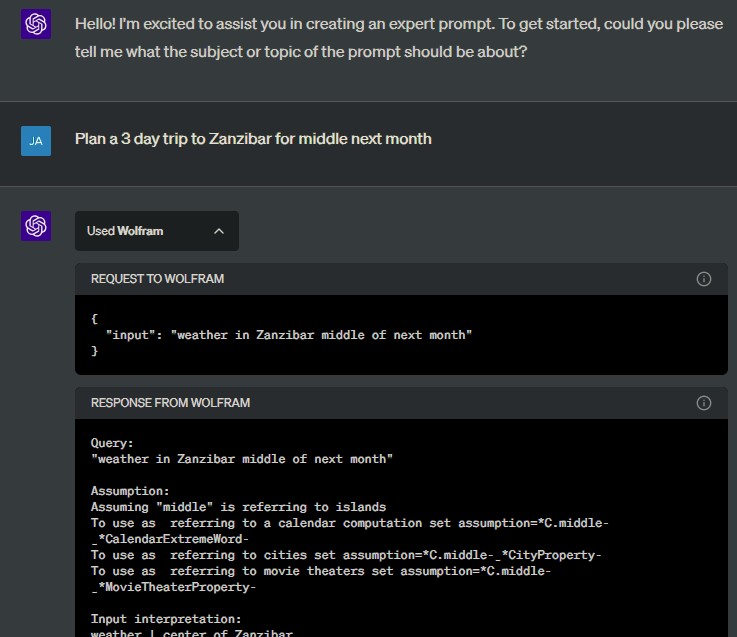

Using this prompt you can craft the perfect prompt for anything under or above the sun. For example, I asked it to plan a trip to Zanzibar for next month.

As you can see, it first looked at what the weather would be for next month before continuing. A nice little touch if you ask me.

It then proceeded to generate an initial prompt and then asked me for more details.

Prompt: You are my personal travel planner. I need you to plan a 3-day trip to Zanzibar for the middle of next month. The plan should include recommendations for accommodation, local attractions, dining, and transportation. The itinerary should be well-structured, with each day clearly outlined from morning to evening. Also, consider the weather forecast for that period in your planning. Here are some examples of my communication style: "I want you to become my Expert Prompt Creator. Your goal is to help me craft the best possible prompt for my needs." "Plan a 3 day trip to Zanzibar for middle next month." "Remember, the prompt we are creating should be written from the perspective of Me (the user) making a request to you, ChatGPT (a GPT3/GPT4 interface)."

Critique: The prompt is clear and concise, providing a specific task for the AI to complete. However, it could be improved by including more details about the user's preferences. For example, the type of accommodation (luxury, budget, etc.), dietary restrictions for dining recommendations, and specific interests (history, nature, adventure, etc.) for local attractions. The prompt could also specify the user's starting location for more accurate transportation planning.

Questions:

- What is your starting location for this trip?
- Do you have any specific preferences for accommodation, dining, or attractions?
- Do you have any dietary restrictions or preferences that should be considered for dining recommendations?

I then answered its questions very briefly:

1. Flying in from Cape Town. 2. Budget 3. No.

This led to the next iteration of the prompt.

You can do this iteratively until you are happy with the prompt, then simply run it in a new chat window. Attached below is the PDF output of the generated prompt. It searched the web for the most relevant information like where to stay, places to eat and even the weather for the specific time I plan to visit. It then came up with a rather good itinerary for the 3 day visit to Zanzibar.
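If you would rather script this back-and-forth than type it into a chat window, the loop described above can be sketched as follows. The `chat` function is a hypothetical stand-in for whatever sends the conversation so far to the model and returns its reply; the role/content message shape mirrors common chat APIs but is an assumption here.

```python
from typing import Callable

Message = dict[str, str]


def refine_prompt(
    chat: Callable[[list[Message]], str],
    creator_prompt: str,
    answers: list[str],
) -> str:
    """Run the prompt-creator conversation and return the model's last reply."""
    history: list[Message] = [{"role": "user", "content": creator_prompt}]
    reply = chat(history)  # first reply: a greeting asking what the prompt is about
    history.append({"role": "assistant", "content": reply})
    for answer in answers:
        # Each answer feeds the next Prompt / Critique / Questions round.
        history.append({"role": "user", "content": answer})
        reply = chat(history)
        history.append({"role": "assistant", "content": reply})
    return reply
```

The whole history is sent on every round, so the conversation uses more tokens with each iteration.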

This is just one example of how you can up your prompting game. I hope it helps you reach your goals, and that $335k per year salary, even quicker.

Compressing your prompts

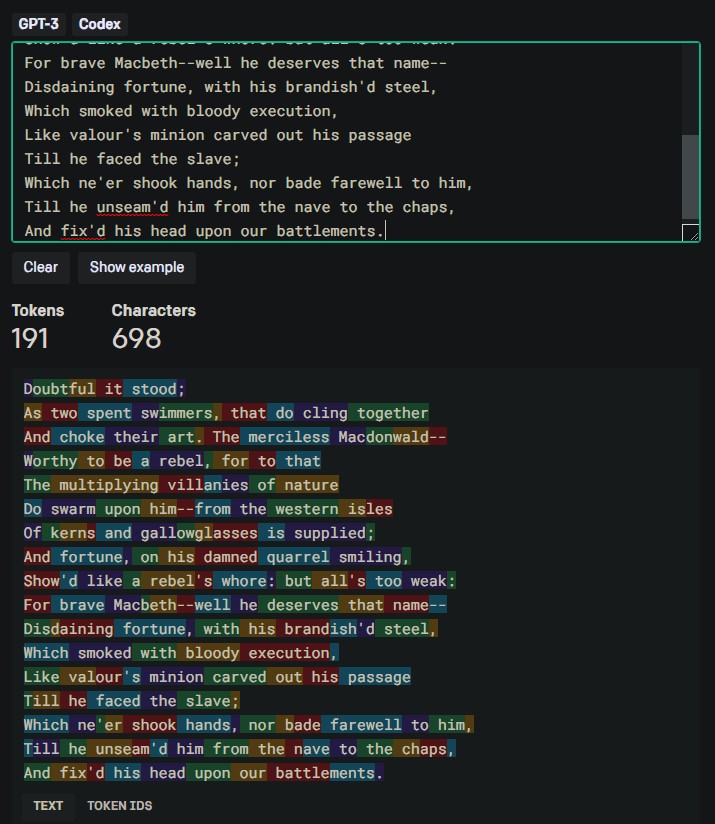

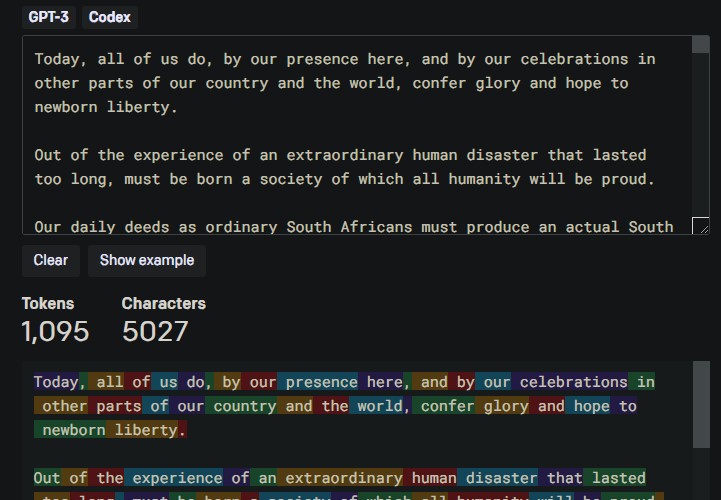

The third and final one relates to fitting more content into a single prompt. At the time of this article, there is a limit on how many words you can use per prompt. So, for example, you are not able to paste the whole of Macbeth into one prompt and ask it to summarize it for you. GPT uses a system of tokens, with GPT3 having a limit of 4096 tokens and GPT4 having about 8000. So what is a token? If you are old enough, you would think it’s the coins you use to play arcade games, right? Well, in this case it’s a representation of a word. Well, words and all the characters that go along with a sentence, including the spaces. Although long words will be split up into multiple tokens, for simplicity’s sake you can think of one token as equal to one word. There is also a 32000-token version, but you can only access this via the API at the moment. And again, you have to have a large bank account, since one prompt costs you about $2.
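For a back-of-the-envelope check, here is a small Python sketch. It uses the common rule of thumb of roughly four characters of English text per token, which is a little closer than “one token per word” but still only an estimate; a real tokenizer will give different numbers. The limits are the ones quoted above, and the price of $0.06 per 1,000 prompt tokens for the 32000-token model is my assumption, based on the list price around the time of writing.

```python
import math

# Context limits as quoted in this article; treat them as approximate.
CONTEXT_LIMITS = {"gpt3": 4096, "gpt4": 8000, "gpt4-32k": 32000}


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate: about four characters of English text per token."""
    return math.ceil(len(text) / chars_per_token)


def fits(text: str, model: str) -> bool:
    """True if the estimated token count is within the model's limit."""
    return estimate_tokens(text) <= CONTEXT_LIMITS[model]


def prompt_cost(tokens: int, usd_per_1k_tokens: float) -> float:
    """Cost in dollars of sending `tokens` prompt tokens at a given price per 1,000."""
    return tokens / 1000 * usd_per_1k_tokens


# A completely full 32000-token prompt at the assumed price of $0.06 per 1,000 tokens.
full_prompt_cost = prompt_cost(32000, 0.06)
```

Under that assumed price, a full 32000-token prompt works out to about $1.92, which is where the “about $2” comes from. The model's reply is billed on top of that.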

Below is a visual representation of how tokens are calculated:

So what do you do if you want to add more words into your prompt but keep hitting the max token limit? Well, you can compress your prompt into the “text” GPT uses in the background to compress all the words being given to it.

You can use the above prompts to compress your text so that you can input more text than you would normally be able to do. Simply add your text below this prompt and have GPT compress it for you.

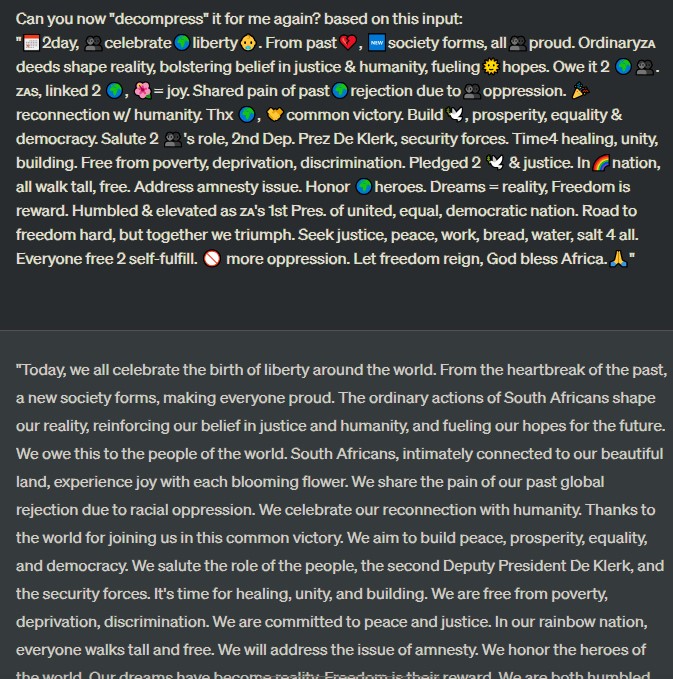

For example, I took Nelson Mandela’s first speech after he became president and asked GPT to compress it for me. For reference, this is how many tokens the speech takes up in human-readable format:

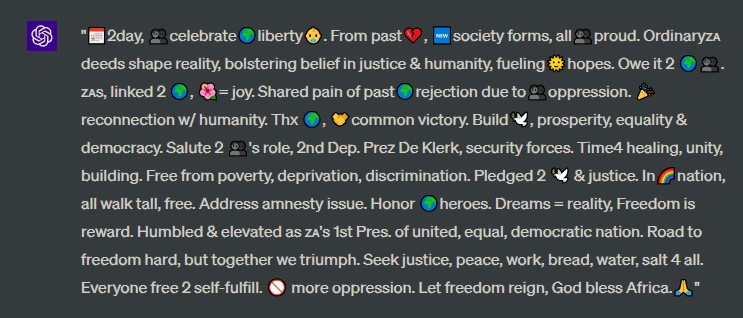

And this is the result after GPT compressed the text for us. It may look like a conversation between two teenagers: the text is compressed using additional characters, and some of these are interpreted as emoji when viewing it.

So how do you know this is still the original text? I asked GPT to “uncompress” the text again. And although not word for word, it still gets most of what was contained in the original text.
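If you want a number rather than a gut feeling for “not word for word”, one option is to compare the original and the uncompressed text word by word. The sketch below uses Python's standard `difflib` module; the `word_overlap` helper is my own naming, and it only measures how much of the wording survived, not whether the meaning did.

```python
from difflib import SequenceMatcher


def word_overlap(original: str, restored: str) -> float:
    """Similarity between two texts on a 0.0 to 1.0 scale, compared word by word."""
    a = original.lower().split()
    b = restored.lower().split()
    # autojunk=False keeps very common words from being ignored in long texts.
    return SequenceMatcher(None, a, b, autojunk=False).ratio()
```

A score of 1.0 means the two texts use the same words in the same order. A faithful paraphrase can still score low, so treat the result as a rough signal only.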

Something to note is that this approach is feasible when working with text, but not necessarily when working with code, because GPT takes liberties with the text, resulting in “broken” code. So it’s not foolproof, but I think it’s an interesting way to see how GPT interprets our input text.

I hope some of these prompts are useful or just interesting to discuss around the old water cooler or in the lobby of an online meeting while waiting for everyone to join.

References:

https://arxiv.org/abs/2305.10601

https://arxiv.org/pdf/2302.08399.pdf